You need actual data to work on data and web scraping is one of the ways you can collect data.

In this post, I write about a Python web scraper I coded that collects data from a fictional bookstore.

Resources used

For this project, I used the following Python libraries,

- Beautiful soup 4

- Pandas

- Requests

I followed Corey Schafer's Python web scraper tutorial but I tweaked the code a little and my code can be found on Github.

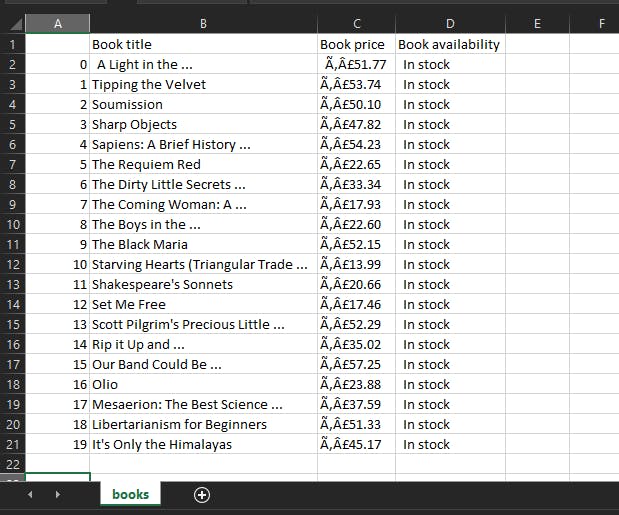

This is what the output looks like.

Knowledge gained

In the course of learning to code this web scraper, I learned that,

Web scrapers are used to extract data from websites while web crawlers are used to find links on the web.

Some websites are unfriendly to web scrapers. To check if a website blocks a web scraper, type "/robots.txt" after the URL. For example, to check if IMDB, a popular movie rating and review website, will block web scrapers,

imdb.com/robots.txt

The result

#robots.txt for https://www.imdb.com properties

User-agent: *

Disallow: /OnThisDay

Disallow: /ads/

Disallow: /ap/

Disallow: /mymovies/

Disallow: /r/

Disallow: /register

Disallow: /registration/

Disallow: /search/name-text

Disallow: /search/title-text

Disallow: /find

Disallow: /find$

Disallow: /find/

Disallow: /tvschedule

Disallow: /updates

Disallow: /watch/_ajax/option

Disallow: /_json/video/mon

Disallow: /_json/getAdsForMediaViewer/

Disallow: /list/ls*/_ajax

Disallow: /*/*/rg*/mediaviewer/rm*/tr

Disallow: /*/rg*/mediaviewer/rm*/tr

Disallow: /*/mediaviewer/*/tr

Disallow: /title/tt*/mediaviewer/rm*/tr

Disallow: /name/nm*/mediaviewer/rm*/tr

Disallow: /gallery/rg*/mediaviewer/rm*/tr

Disallow: /tr/

Disallow: /title/tt*/watchoptions

Disallow: /search/title/?title_type=feature,tv_movie,tv_miniseries,documentary,short,video,tv_short&release_date=,2020-12-31&lists=%21ls538187658,%21ls539867036,%21ls538186228&view=simple&sort=num_votes,asc&aft

User-agent: Baiduspider

Disallow: /list/*

Disallow: /user/*

Yeah, I don't think web scrapers are allowed. I suggest reading this article on Scrape.do to know how best to go about web scraping.

Static sites are easier to build a web scraper for than dynamic websites because information on dynamic websites is updated often.

Some big websites have APIs that let you scrape the websites like Tweepy, a Python web scraping tool for Twitter.

Beautiful Soup and Python's requests library can help you build a web scraper for a static site in minutes.

HTML parsers are used to analyze and convert web pages to HTML document trees. For web scraping, this makes accessing objects on the web page easier.

Web scraping is a fine way to collect data especially as the data can be converted to various formats using the right tools.

Have you built a web scraper before? Are my takeaways similar to yours?